Researchers have found a way to hijack voice assistants such as Google Assistant, Amazon’s Alexa and Apple’s Siri from hundreds of feet away by shining laser pointers at the devices that run them. Smart speakers running all three assistants were shown to be vulnerable, the researchers also got the attack working on a Facebook Portal device, and even some phones and tablets proved susceptible.

The research team showed that they could use the lasers to "speak" to smart speakers and smartphones running Google Assistant, Amazon’s Alexa or Apple’s Siri, and even get them to perform tasks such as opening a garage door. By focusing the lasers with a telephoto lens, the researchers tricked a Google Home into opening a garage door from 230 to 350 feet away.

Opening the garage door was easy, the researchers said, and the same light commands could have been used to hijack any smart-home system connected to the voice assistants.

The researchers explained that they shone a light beam with a command encoded in it at the microphone built into a smart speaker; the sound of each command was encoded in the intensity of the beam. The light hits the diaphragm of the speaker's microphone and makes it vibrate in the same way as if someone had spoken the command.

In effect, the laser tricks the microphone into producing electrical signals as if it were hearing someone's voice. An attacker could use this method to buy things online, control smart-home switches, and remotely unlock and start a car that is linked to the speaker. “It’s difficult to know how many products are affected, because this is so basic.”

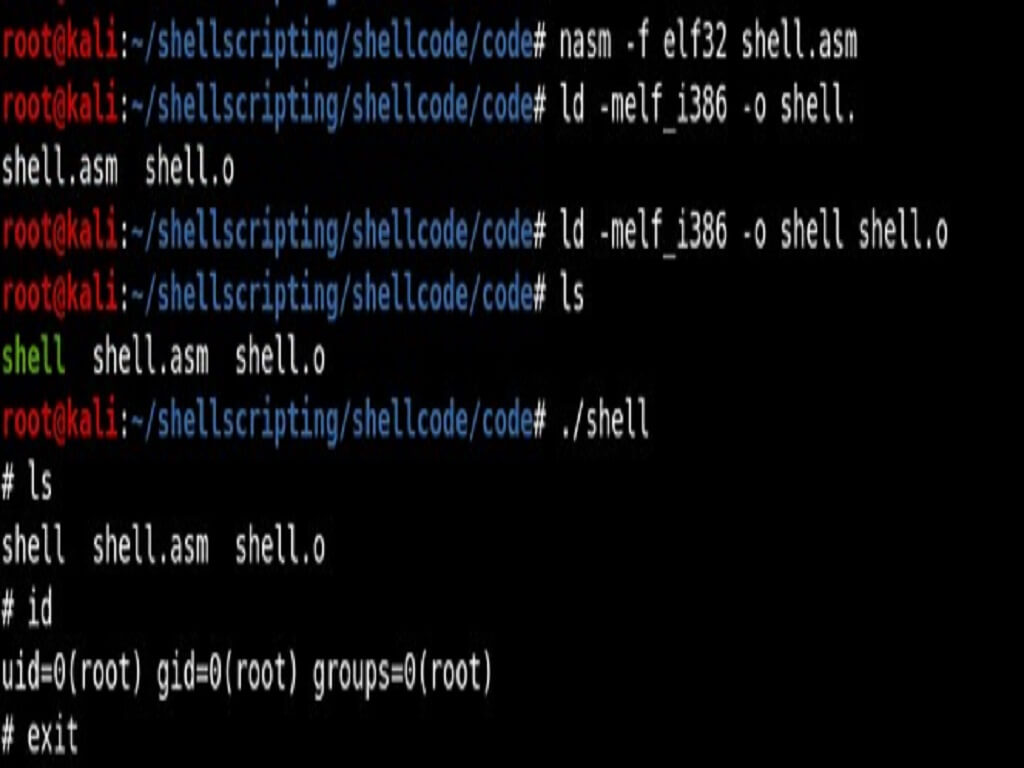

Exploiting the flaw requires specific equipment, such as a laser pointer, a laser driver, a sound amplifier and a telephoto lens, among other things. The attacker also has to plan the attack carefully, because they could easily be caught while the laser beam is aimed at the speaker.

By shining the laser through a window at the microphones inside smart speakers, tablets or phones, a faraway attacker can remotely send inaudible and potentially invisible commands, which are then acted on by Alexa, Portal, Google Assistant or Siri.

To make matters worse, once attackers gain control of a voice assistant, they can use it to break into other systems. For example, an attacker can:

- Control smart home switches

- Open smart garage doors

- Make online purchases

- Remotely unlock and start certain vehicles

- Open smart locks by stealthily brute-forcing the user's PIN.

Why does this happen?

Microphones convert sound into electrical signals. The main discovery behind light commands is that in addition to sound, microphones also react to light aimed directly at them. Thus, by modulating an electrical signal in the intensity of a light beam, attackers can trick microphones into producing electrical signals as if they are receiving genuine audio.
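To make the modulation idea concrete, here is a minimal Python sketch of amplitude-modulating an audio waveform onto a laser diode's drive current: a constant DC bias keeps the laser emitting, and the audio signal swings the current, and with it the light intensity, up and down around that bias. The function names and the default bias and swing (200 mA DC, 150 mA peak-to-peak) are hypothetical, illustrative choices, not the researchers' actual setup; a real rig would feed such a waveform through a laser driver rather than compute it as a Python list.

```python
import math


def sine_tone(freq_hz, duration_s, sample_rate=16000):
    """Generate a test tone as samples in [-1, 1] (a stand-in for a recorded voice command)."""
    n = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * k / sample_rate) for k in range(n)]


def audio_to_laser_current(samples, i_dc_ma=200.0, i_pp_ma=150.0):
    """Amplitude-modulate audio samples onto a laser-diode drive current in mA.

    i_dc_ma is the DC bias that keeps the laser emitting; i_pp_ma is the
    peak-to-peak swing the audio adds on top of it. Both defaults are
    hypothetical, illustrative values.
    """
    if i_dc_ma - i_pp_ma / 2.0 <= 0:
        raise ValueError("swing would drive the current to zero or below")
    # Normalize so the loudest sample maps to the full +/- i_pp/2 swing.
    peak = max((abs(s) for s in samples), default=0.0)
    scale = 1.0 / peak if peak > 0 else 0.0
    # Light output roughly tracks drive current, so the beam's brightness
    # now follows the audio waveform.
    return [i_dc_ma + (i_pp_ma / 2.0) * s * scale for s in samples]


if __name__ == "__main__":
    tone = sine_tone(1000, 0.01)  # 10 ms of a 1 kHz tone
    currents = audio_to_laser_current(tone)
    # prints: 160 samples, 125.0 to 275.0 mA
    print(f"{len(currents)} samples, {min(currents):.1f} to {max(currents):.1f} mA")
```

Normalizing to the loudest sample uses the full modulation depth while guaranteeing the current never drops below the bias minus half the swing. The sketch covers only the encoding step, not the optics or driver electronics.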

The researchers have informed Amazon, Apple and Google about this security issue. In an emailed statement, a Google spokesperson said: "We are closely reviewing this research paper. Protecting our users is paramount, and we're always looking at ways to improve the security of our devices."

An Amazon spokesperson said in an emailed statement that the company has been engaging with the researchers to better understand their work, adding: "Customer trust is our top priority and we take customer security and the security of our products seriously."

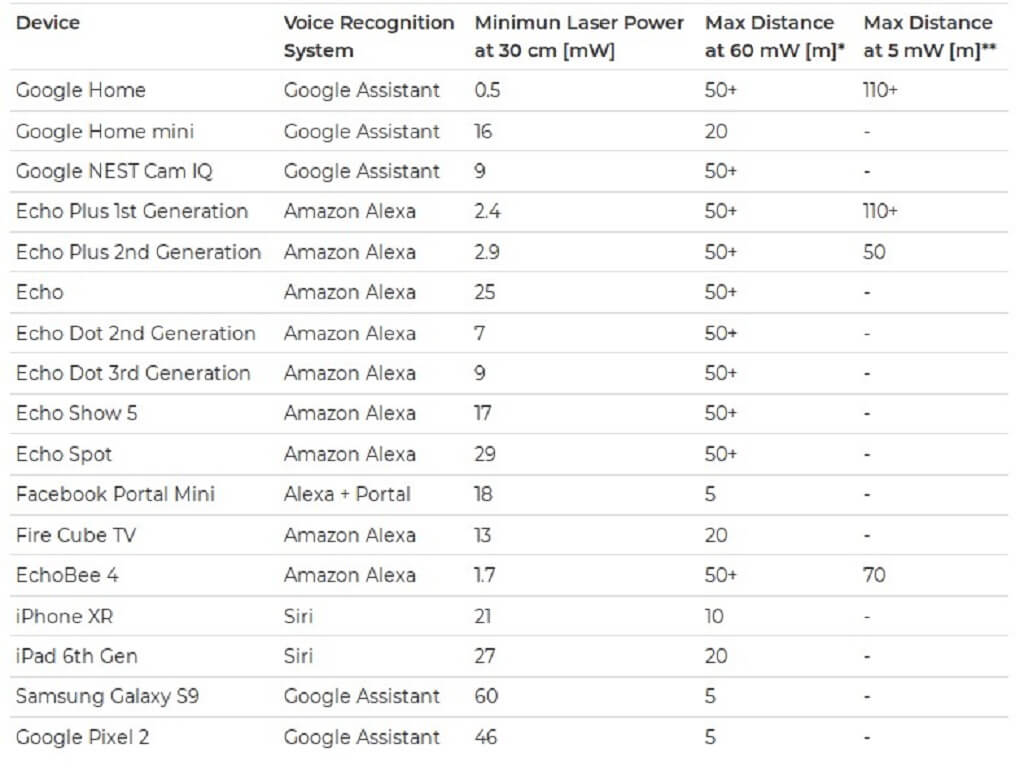

Which devices are susceptible to Light Commands?